A few years ago, fake videos felt like a future problem — something reserved for spy movies or experimental tech demos shown at conferences. Now, they appear on the same apps you use to check group chats or scroll through during lunch breaks.

When USC computer science professor Hao Li warns, “People are already using the fact that deepfakes exist to discredit genuine video evidence. Even though there’s footage of you doing or saying something, you can say it was a deepfake and it’s very hard to prove otherwise,” he’s pointing to a problem that goes far beyond manipulated videos.

He’s describing a world where trust itself is under threat. Deepfakes are steadily blurring the line between fact and fiction, making it harder to rely on what we see and hear online. This shift creates fertile ground for large-scale misinformation and propaganda that can fool people no matter how technically savvy they are. That reality makes it essential for everyone, not just cybersecurity professionals, to understand how to protect themselves.

What Deepfakes Actually Are and Why They Work

At their core, deepfakes use artificial intelligence to create or alter audio, images, or videos so convincingly that they appear real. Think of the striking clips you may have seen on X (formerly Twitter), TikTok, or Instagram, some of them generated from a single still image, made with tools like OpenAI’s Sora, Grok Imagine (xAI), DeepFaceLab, or DeepBrain.

Behind the scenes, these systems train on existing footage or voice samples, learning how to replicate facial expressions, movements, and speech patterns with unsettling accuracy.

What makes deepfakes effective isn’t only the technology — it’s psychology. People are conditioned to trust video more than text, and when something looks right, the brain fills in the gaps. Add emotional triggers such as outrage, fear, or curiosity, and skepticism quickly fades. This is why fake content spreads so easily when it reinforces what viewers already want to believe.

So how can you protect yourself?

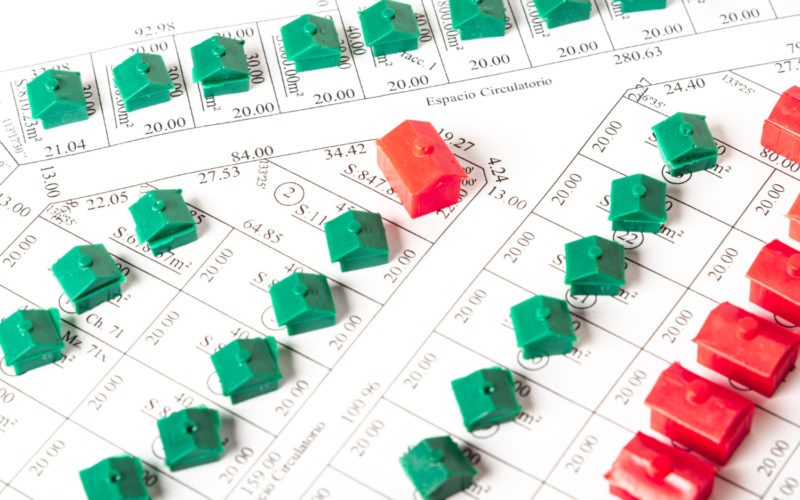

Limit How Much Raw Material You Put Online

This is one of the most overlooked defenses. Many people underestimate how easily personal photos, videos, and voice recordings can be collected and reused to create deepfakes. Others assume they’re not high-profile enough to be targeted.

In reality, you don’t need to be a public figure to attract attention. Bad actors rely on large volumes of data from everyday users to generate convincing synthetic media. The more content you share publicly, the more material exists for misuse.

Does that mean disappearing from the internet altogether? Of course not. It does mean being intentional about what you make publicly available. Well-lit videos where your face is clearly visible, your voice is clean, and your expressions are unobstructed provide ideal training data for AI tools.

If you already have content like this online, consider removing it or limiting access to close friends and family. Over time, those small changes can significantly reduce exposure.

Pay Attention to Audio, Not Just Video

Many deepfake scams now rely on audio alone — fake phone calls, voice notes, or voicemail messages that sound disturbingly authentic. If you’ve shared podcast clips, interviews, or long-form recordings publicly, that audio can be enough to clone your voice.

One practical safeguard is to avoid posting clean, uninterrupted voice samples unless absolutely necessary. Another is establishing verification habits with close contacts, such as code words or follow-up calls for sensitive requests.

If a message sounds urgent, emotional, or out of character, pause. Deepfake audio often depends on urgency to bypass common sense. Taking a moment to verify can make all the difference.

Learn the Subtle Red Flags, Even If They’re Fading

Although deepfakes continue to improve, many still reveal small inconsistencies. These might include facial movements that don’t quite match speech, unnatural blinking, lighting that shifts oddly, or audio that lacks natural pauses.

That said, visual cues alone aren’t enough. Context is your strongest defense. Ask whether the behavior matches the person’s usual tone, timing, and communication style. Many deepfake scams fall apart under this kind of scrutiny, even when the footage itself looks flawless.

If a video call or message feels off, trust that instinct long enough to verify it elsewhere — call the person directly or reach out on another platform.

Protect Your Accounts Like Your Reputation Depends on It

In many cases, your reputation really does depend on your accounts. If someone gains access to them, they don’t need to fake your face to cause harm. A single misleading post can do serious damage.

Basic security practices still matter:

- Use strong, unique passwords for every account.

- Enable two-factor authentication wherever possible.

- Avoid logging in on public Wi-Fi without proper protection.

- Regularly review account activity for unfamiliar logins or connected apps.

Well-secured accounts make it much harder for attackers to impersonate you, whether through deepfakes or simpler forms of social engineering.
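For the first item on that list, a password manager will normally generate credentials for you. If you are curious what that looks like under the hood, here is a minimal sketch using Python’s standard `secrets` module. The function name `generate_password`, the 20-character default, and the 12-character minimum are illustrative assumptions, not an official recommendation.

```python
import secrets
import string

# Pool of characters to draw from: letters, digits, and punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password containing lower, upper, digit, and symbol.

    Hypothetical helper for illustration; the 12-character floor is an
    assumption, not a standard.
    """
    if length < 12:
        raise ValueError("use at least 12 characters")
    while True:
        # secrets.choice draws from a cryptographically secure source,
        # unlike random.choice.
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate
```

Calling `generate_password()` once per account gives you a different value each time, which is the point: a leak on one site should never unlock another.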

Keep Records That Establish Authenticity

Fake content spreads quickly, and by the time the truth surfaces, the damage is often already done. That’s why being able to prove authenticity matters.

Keep original copies of important videos, messages, or recordings, along with timestamps and metadata. If you’re a professional or public-facing creator, consider watermarking original content or using platforms that verify uploads at the time of posting. These steps create a clear digital trail.

While they won’t eliminate deepfakes, they can significantly strengthen your ability to defend yourself if disputes, impersonation, or blackmail arise.
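One simple way to build that kind of trail is to record a cryptographic fingerprint of each original file. The sketch below is an illustration under stated assumptions, not a forensic standard: the helper names (`sha256_of_file`, `build_manifest`, `write_manifest`) and the manifest layout are hypothetical, and it uses only Python’s standard library.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(folder: Path) -> list[dict]:
    """List every file under `folder` with its size, modified time, and hash."""
    entries = []
    for path in sorted(folder.rglob("*")):
        if not path.is_file():
            continue
        stat = path.stat()
        modified = datetime.fromtimestamp(stat.st_mtime, tz=timezone.utc)
        entries.append({
            "file": path.relative_to(folder).as_posix(),
            "bytes": stat.st_size,
            "modified_utc": modified.isoformat(),
            "sha256": sha256_of_file(path),
        })
    return entries


def write_manifest(folder: Path, manifest_path: Path) -> None:
    """Save the manifest as JSON, stamped with the time it was recorded."""
    record = {
        "recorded_utc": datetime.now(timezone.utc).isoformat(),
        "files": build_manifest(folder),
    }
    manifest_path.write_text(json.dumps(record, indent=2), encoding="utf-8")
```

For example, `write_manifest(Path("originals"), Path("manifest.json"))` would fingerprint everything in a folder named `originals` (a placeholder name). Keep the manifest outside that folder so it is not hashed on the next run. A matching hash shows a file has not changed since the manifest was written; it does not by itself prove when the file was created, so sending the manifest to a party that timestamps it independently, such as an email to yourself or a trusted contact, makes it more persuasive.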

Let’s Recap

Deepfakes are not just a technical issue; they’re a trust issue. As Hao Li points out, their most dangerous effect may be the growing ability to dismiss real evidence as fake. That shift fundamentally changes how truth operates online.

You don’t need to be an expert to protect yourself. Awareness, patience, and verification habits go a long way. If you ever become a target, act calmly but quickly: document everything, report the content to the relevant platform, and request takedowns under impersonation or misinformation policies. If your reputation, finances, or personal safety are at risk, seek legal advice.

Staying informed and intentional won’t eliminate the threat, but it will make you far harder to deceive.