UX testing used to be simple: get some users, watch them struggle with your website, and fix what broke. Now we have AI systems that can analyze thousands of users without anyone sitting in a lab. Both traditional UX testing and AI UX testing have their place, but figuring out which one to use (or how to use them together) can be confusing. Companies using platforms like aqua cloud are finding that smart AI usability testing doesn’t replace human insight; it amplifies it.

What Is Traditional UX Testing?

Traditional UX testing is exactly what it sounds like: putting real people in front of your product and watching what happens. It’s been around since the early days of software development, and for good reason: there’s something irreplaceable about seeing a confused user try to navigate your checkout process.

The core idea is human observation. You create scenarios, recruit participants, and watch them interact with your design while taking detailed notes. Sometimes users think out loud, sometimes they just grunt in frustration, but either way, you’re getting direct feedback from the people who actually use your product.

Methods for traditional UX testing

The traditional approach offers several time-tested methods, each designed to capture different aspects of user behavior. Usability testing sessions remain the gold standard, where participants complete specific tasks while researchers observe and take notes. Focus groups bring multiple users together to discuss their experiences and reactions. User interviews dive deeper into individual motivations and pain points that might not surface during task-based testing.

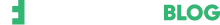

Beyond these conversational methods, there are more structured approaches:

- Card sorting exercises help understand how users mentally organize information

- A/B testing compares different design versions with real user groups

- Heuristic evaluations involve UX experts reviewing interfaces against established principles

- Eye-tracking studies measure exactly where users look and how their attention moves across the page
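
Of the methods above, A/B testing is the one that reduces most directly to arithmetic. The sketch below is one common way to check whether the difference between two design versions is likely to be real: a two-proportion z-test. The function name and the visitor and conversion counts are hypothetical and chosen only for illustration.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a: conversions and visitors for version A (hypothetical inputs)
    conv_b / n_b: conversions and visitors for version B
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each variant needs at least one visitor")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Made-up example: 120 of 2,400 visitors convert on A, 156 of 2,400 on B.
z, p = two_proportion_z_test(120, 2400, 156, 2400)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up numbers the test gives a z of about 2.23 and a p-value of about 0.026, which most teams would treat as evidence that version B really does convert better.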

Benefits of the traditional approach

What makes traditional UX testing valuable isn’t just the data. It’s the context around that data. When you watch someone use your product, you see their facial expressions when they get confused. You hear the slight sigh of frustration before they click the wrong button. You can ask, “What were you thinking when you paused there?” and get an honest answer.

This human element provides insights that no algorithm can capture. Traditional testing excels at revealing the emotional journey users experience with your product. The benefits extend beyond just gathering feedback:

- Rich qualitative insights emerge from direct conversation and observation

- Contextual understanding explains the reasoning behind user decisions

- Flexibility to explore unexpected issues that surface during sessions

- Human empathy helps interpret subtle behavioral cues and emotional responses

- Follow-up questions can uncover deeper insights when interesting patterns emerge

Limitations of traditional UX testing

Traditional testing has real constraints. You might get insights from 15 users, but what about the other 10,000? The process takes weeks and is expensive, and people act differently when they know they’re being watched.

Other limitations create additional friction:

- Small sample sizes limit the statistical significance of findings

- Observer bias can influence both researcher questions and participant responses

- High costs for facilities, recruitment, and researcher time make frequent testing impractical

- Limited scalability across different user segments and geographic regions

- Subjective interpretation means different researchers might reach different conclusions from the same data
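
To make the sample-size point concrete, here is a minimal sketch that computes a Wilson score interval for a task-completion rate. It compares a 15-person study with a 10,000-user dataset at the same observed rate. The function name and the completion counts are hypothetical.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion (z=1.96 is roughly 95% confidence)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    return center - half, center + half

# Same observed 60% completion rate, very different sample sizes (made-up data).
for successes, n in [(9, 15), (6000, 10000)]:
    low, high = wilson_interval(successes, n)
    print(f"n={n}: {low:.2f} to {high:.2f}")
```

With 15 participants the interval runs from roughly 0.36 to 0.80, so the true completion rate could be poor or excellent. With 10,000 users it narrows to roughly 0.59 to 0.61.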

What Is AI Automated UX Testing?

AI automated UX testing flips the traditional model completely. Instead of bringing users into a lab, AI systems analyze how thousands of users naturally interact with your product in real-world conditions. These systems use machine learning to spot patterns that would be impossible for human researchers to identify manually.

The technology has become sophisticated enough to track not just clicks and scrolls, but also mouse hesitation patterns, reading sequences, and even facial expressions through webcam analysis. AI UX design testing platforms can process user sessions 24/7, building a comprehensive picture of user behavior without any human intervention.

AI automated UX testing techniques and methods

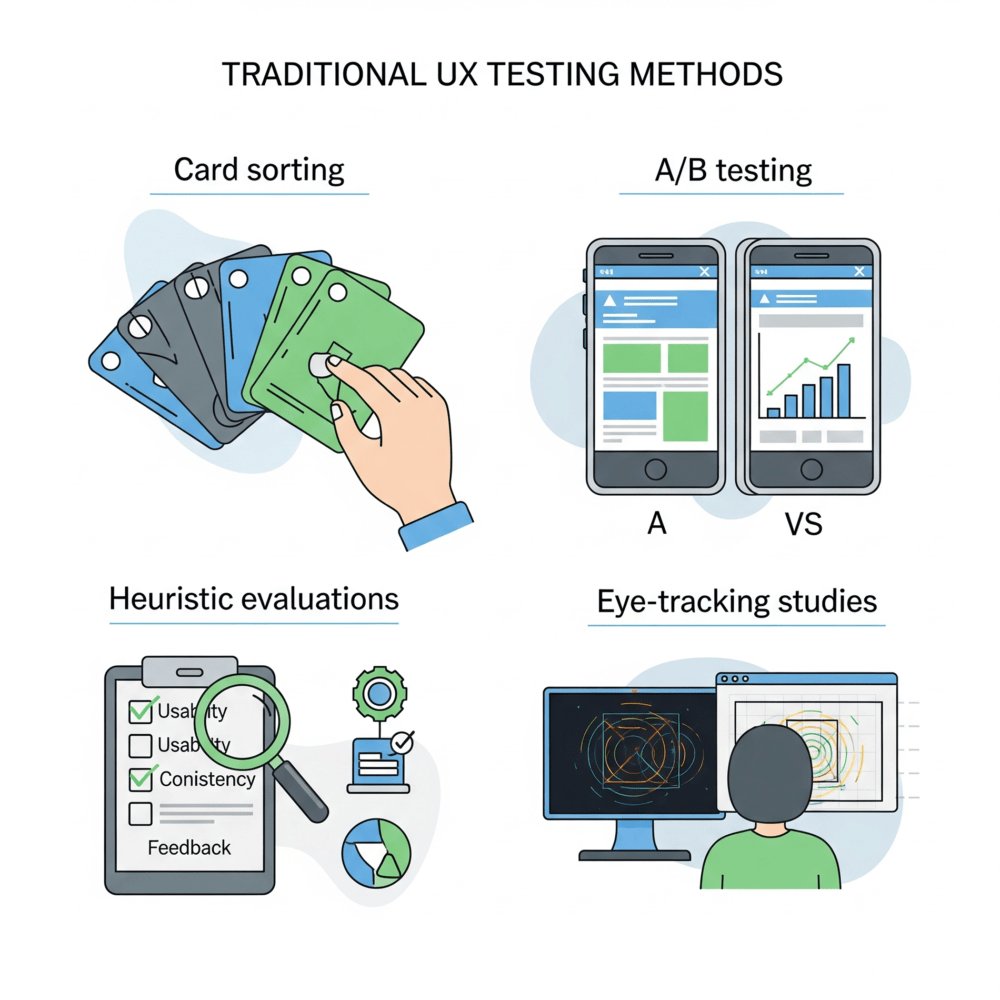

AI systems create heatmaps, replay user sessions, and predict problems before they happen. They can even analyze facial expressions and extract insights from user reviews. The most advanced techniques include:

- Behavioral pattern recognition that identifies common user paths and dropout points

- Real-time optimization that adjusts interfaces based on ongoing user behavior analysis

- Sentiment analysis of user comments and support tickets to identify pain points

- Conversion funnel analysis that automatically spots where users abandon processes
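
Conversion funnel analysis is the easiest of these techniques to sketch in code. The example below counts how many sessions reach each step and flags the transition with the highest drop-off. It is a simplified illustration, not how any particular platform works: the step names, session data, and function names are all hypothetical, and real tools work from much richer event logs.

```python
from collections import Counter

def funnel_dropoff(sessions, steps):
    """Return (from_step, to_step, reached_from, reached_to, dropoff_rate) rows.

    sessions: iterable of lists of the step names each session reached
    steps: ordered list of funnel step names
    """
    reached = Counter()
    for visited in sessions:
        seen = set(visited)
        for step in steps:
            # A session counts for a step only if it reached every earlier step.
            if step not in seen:
                break
            reached[step] += 1
    report = []
    for prev, cur in zip(steps, steps[1:]):
        prev_n, cur_n = reached[prev], reached[cur]
        rate = 1 - cur_n / prev_n if prev_n else 0.0
        report.append((prev, cur, prev_n, cur_n, rate))
    return report

def worst_step(report):
    """Return the transition with the highest drop-off rate."""
    return max(report, key=lambda row: row[4])

# Made-up checkout sessions for illustration.
steps = ["cart", "shipping", "payment", "confirm"]
sessions = (
    [["cart"]] * 2
    + [["cart", "shipping"]] * 1
    + [["cart", "shipping", "payment"]] * 5
    + [["cart", "shipping", "payment", "confirm"]] * 2
)
report = funnel_dropoff(sessions, steps)
prev, cur, prev_n, cur_n, rate = worst_step(report)
print(f"Largest drop-off: {prev} -> {cur} ({prev_n} to {cur_n} sessions)")
```

In this made-up data the largest drop-off is between payment and confirmation, where 7 sessions fall to 2. The output shows where users leave but says nothing about their reasons.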

Benefits of the AI approach

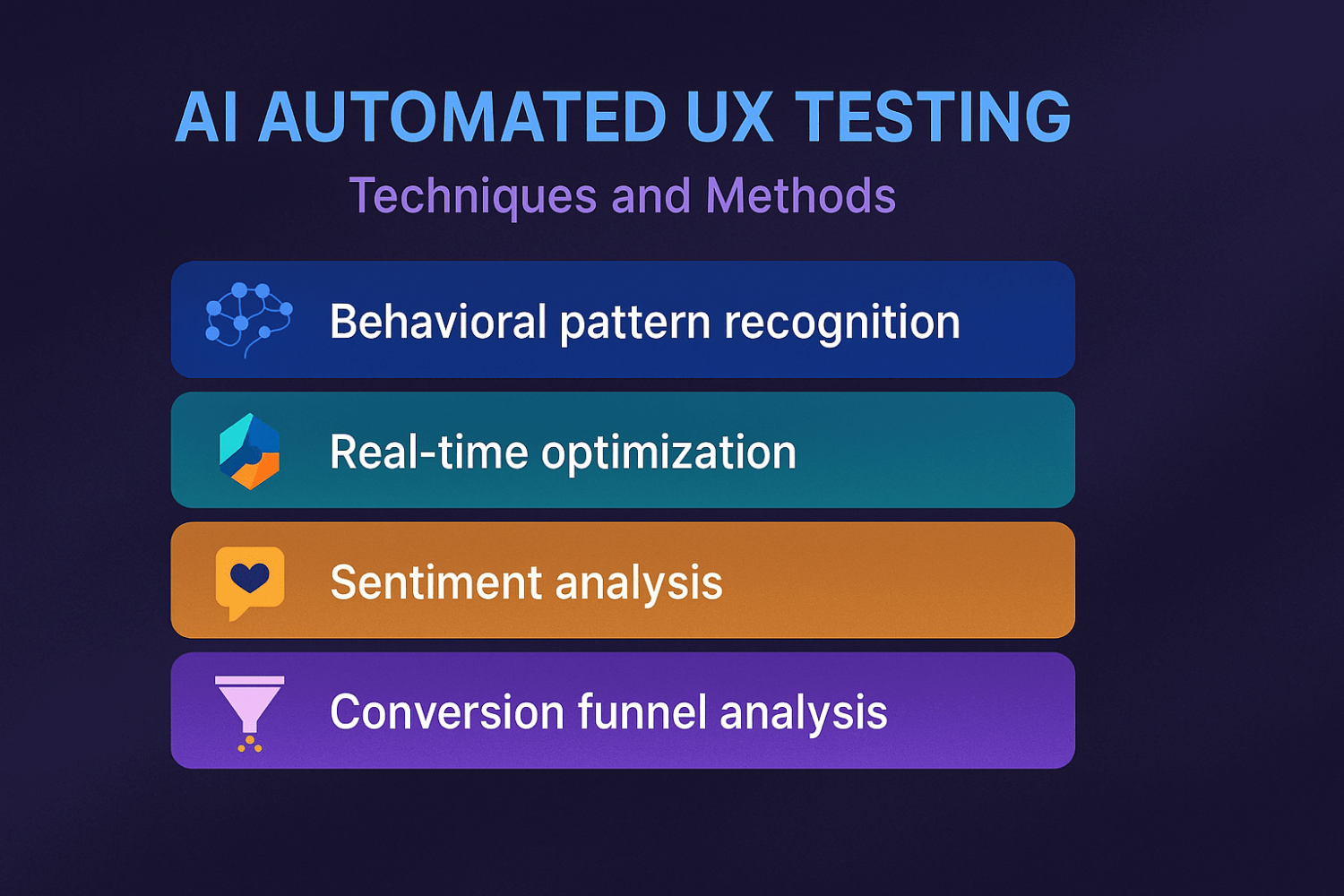

AI testing processes thousands of users, while traditional methods handle dozens. Users behave naturally because they don’t know they’re being watched, and the cost per insight drops considerably once systems are running.

The benefits compound over time:

- Massive scale analysis processes user behavior data that would overwhelm human researchers

- Continuous monitoring provides 24/7 insights without human intervention

- Consistent data collection reduces the observer bias that affects in-person observation and interpretation

- Real-time problem identification catches issues as they happen rather than during scheduled testing cycles

- Global reach analyzes behavior across different time zones, cultures, and demographics

- Pattern recognition spots subtle trends that human observers might miss

Limitations of AI UX testing

But AI testing has a critical weakness: it tells you what users do, but not why they do it. An AI system might spot that users abandon your checkout at step three, but it can’t tell if they’re confused, worried about security, or just comparison shopping. AI systems also struggle with emotional nuance and can’t brainstorm creative solutions like human researchers. They’re great at identifying problems, but terrible at understanding the human psychology behind those problems. Plus, tracking detailed user behavior raises privacy concerns that traditional lab testing simply doesn’t have.

Additional limitations include:

- Missing emotional context about user motivations and frustrations

- Technical complexity requiring specialized knowledge to implement and interpret

- Potential algorithmic bias if training data doesn’t represent diverse user groups

- Limited creative insights compared to human researchers who can think outside established patterns

- Privacy considerations around collecting and storing detailed user behavior data

Key Differences Between Traditional and AI-Based Testing Methods in UX Design

| Aspect | Traditional UX Testing | AI UX Testing |
| --- | --- | --- |
| Sample Size | 5-20 participants per study | Thousands of users analyzed |
| Timeline | Weeks to months | Real-time to hours |
| Cost per Insight | High due to human resources | Lower once systems are established |
| Data Type | Qualitative insights and context | Quantitative patterns and trends |
| Scalability | Limited by human capacity | Highly scalable across platforms |
| Bias Level | Subject to observer bias | Algorithmic bias potential |
| Emotional Understanding | Deep emotional insights | Limited emotional interpretation |
| Flexibility | High adaptability during sessions | Predetermined analysis parameters |
| Implementation Speed | Slow setup and execution | Fast deployment and results |

Do you need to combine the two approaches?

Most successful UX teams don’t choose between traditional and AI testing; they use both strategically. AI excels at identifying problems quickly at scale, while human researchers understand why those problems exist and how to solve them. Smart teams use AI for continuous monitoring, then deploy human researchers to investigate the most critical issues AI identifies. This combination addresses each approach’s weaknesses and enables faster iteration cycles. Instead of waiting weeks for traditional testing results, teams make informed decisions quickly using AI insights, then validate with targeted human research when necessary.

Conclusion

The traditional versus AI debate misses the point entirely. The two approaches solve different parts of the UX testing problem, and the most effective teams use them together rather than picking sides. Traditional testing provides the human context that AI lacks, while AI provides the scale and speed that traditional methods can’t match. As AI technology improves, the real competitive advantage won’t come from choosing the right testing method; it’ll come from combining both approaches intelligently to create better user experiences faster than anyone else.