We’ve all been there — staring at a blank screen, a vivid scene playing out in our minds, yet paralyzed by the sheer logistical mountain of bringing it to life. You see the rain-slicked streets of a cyberpunk Tokyo, you hear the hum of neon lights, you feel the tension of a cinematic chase. But then reality sets in: the cost of equipment, the complexity of 3D rendering software, the steep learning curve of video editing.

For years, the gap between imagination and reality was bridged only by budget and technical expertise. If you couldn’t afford a production crew or hadn’t spent years mastering Blender, your ideas stayed just that: ideas.

But recently, the ground has shifted. The barrier to entry isn’t just lowering; it’s dissolving.

I remember the first time I typed a simple sentence into a generative video model. I wasn’t expecting much — maybe a glitchy, surreal GIF that vaguely resembled my prompt. Instead, what materialized was a coherent, textured scene that felt directed, not just computed. It was a moment of clarity: we are moving from an era of capturing light to an era of synthesizing it.

This is where tools like Sora 2 AI Video enter the narrative. They aren’t just “video makers”; they are translation engines, turning the language of your thoughts into the language of cinema.

The Physics of Imagination: Beyond the “Glitch”

A New Kind of Realism

When we talk about AI video, the conversation often gets stuck on “deepfakes” or novelty. But the real story is about physics.

In my early explorations with older generative models, the laws of nature were often… suggestions. Water flowed upwards, shadows detached from their objects, and people seemed to glide rather than walk. It was impressive, but it broke the immersion. It felt like a dream — unstable and fleeting.

However, the latest iteration of generative technology, particularly the architecture behind OpenAI’s Sora 2 (which powers platforms like Supermaker), seems to have gone to “film school.”

In my recent tests, I noticed a distinct shift. When I prompted a scene involving a glass shattering, the shards didn’t just vanish or morph; they followed trajectories that felt weighty and real. When I described a character walking through snow, the footprints left behind stayed consistent throughout the clip.

This isn’t just about better graphics; it’s about temporal consistency. The model understands that if an object exists in frame 1, it must still exist in frame 100, unless acted upon. This “object permanence” is what separates a trippy visualizer from a usable narrative tool.

The Sound of Silence (No More)

One of the most jarring aspects of early AI video was the silence. You’d get a beautiful, stormy ocean scene, but it was mute. The disconnect between the visual violence of a wave and the dead silence of your speakers shattered the illusion instantly.

What I found compelling about the Supermaker integration is the synchronization of audio. It’s a subtle but critical layer. When a dog barks in the video, you hear it. When a car speeds by, the Doppler effect is present. It transforms the output from a “moving image” into a “video clip.”

The Creative Workflow: From Friction to Flow

Let’s look at how this actually changes your day-to-day creative process.

Traditionally, video production is a linear, subtractive process. You shoot a lot of footage, and then you cut away what you don’t need. You are limited by what you captured on the day of the shoot.

Generative video is an additive, iterative process. You start with nothing and build exactly what you need.

The “What If” Engine

Imagine you are a brand strategist pitching a concept for a perfume ad.

- Old Way: You scour stock footage sites for “woman running in field,” “sunset,” and “elegant bottle.” You stitch them together with a generic track. It looks like a mood board, disjointed and borrowed.

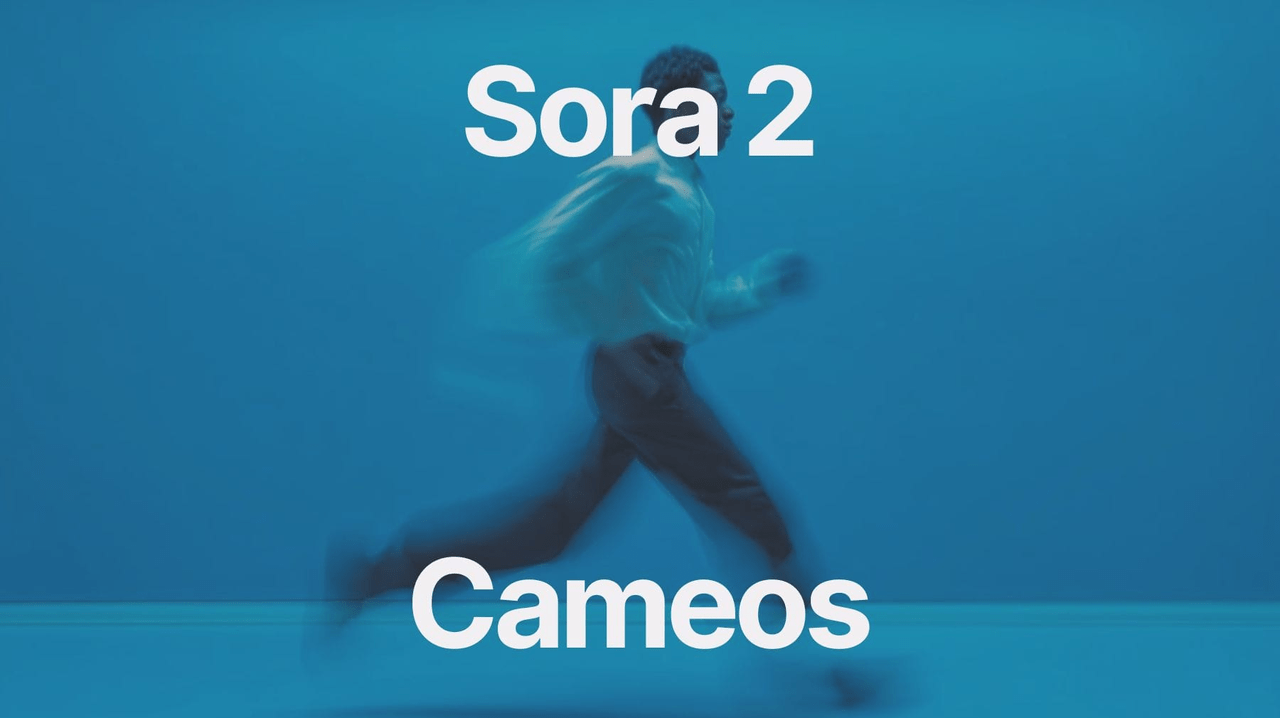

- New Way: You open the Sora AI Video tool. You type: “Cinematic close-up of an amber perfume bottle resting on moss, golden hour lighting, soft focus background, particle effects floating in the air.”

Within moments, you have a bespoke clip. It’s not a “close enough” compromise pulled from a library; it’s built from your own description.

Is the lighting too harsh? You don’t need to reshoot. You just tweak the prompt: “Soft, diffused moonlight.”

This ability to iterate instantly allows you to explore creative avenues that would be too risky or expensive in the physical world. You can test a “noir” style, then a “cyberpunk” style, then a “watercolor” style, all before lunch.

A Comparative Lens: The Evolution of Video Tools

To truly understand where we stand, it helps to visualize the leap from traditional stock assets to generative models.

| Feature | Traditional Stock Footage | Sora AI Video Generation |
| --- | --- | --- |
| Origin | Captured by a camera in the past. | Synthesized in the present. |
| Flexibility | Fixed. You cannot change the camera angle or lighting of a stock clip. | Fluid. You control the weather, the mood, and the action. |
| Cost Model | Pay-per-clip or expensive subscriptions. | Credit-based generation. Generally lower cost per unique asset. |
| Exclusivity | Low. Your competitor might use the same clip. | High. Your video is unique to your specific prompt. |
| Audio | Often silent or requires separate sound design. | Integrated. Synchronized audio generated with the video. |
| Physics | Real-world constraints (gravity, weather). | Simulated reality. Can defy physics if requested (e.g., floating cities). |

The Exclusivity Advantage

This table highlights a key advantage: Exclusivity. In a saturated content market, using the same stock footage as everyone else is a brand killer. Generative video offers a way to own your visual identity without the Hollywood budget.

Navigating the Fog: Honest Limitations

It would be disingenuous to present this technology as a magic wand that replaces a film crew tomorrow. As with any emerging tech, there are rough edges that a prudent creator must navigate.

1. The “Gacha” Element

In my experience, working with AI video can sometimes feel like pulling the lever on a slot machine. Even a carefully written prompt can return a result where a hand has six fingers or a car drives sideways. It requires patience. You are not “directing” in the traditional sense; you are “coaxing.” You might need to generate a clip three or four times before you get one that feels right.

2. The Duration Constraint

Currently, we are looking at short-form content. We are generating clips, not movies. While the technology is rapidly expanding to longer durations, maintaining coherence over a 10-minute narrative is still a challenge. The AI can “forget” what the character looked like at the start of the scene if the clip runs too long.

3. The “Dream Logic”

While the model’s handling of physics has improved markedly, it can still hallucinate. Text on signs might be gibberish. Background characters might morph into lamp posts. These artifacts are becoming rarer, but they remain the telltale signs of synthetic media.

4. The Human Touch

AI can simulate emotion, but it cannot (yet) replicate the nuanced micro-expressions of a seasoned actor delivering a heartbreaking line. For high-drama narrative work, human performance is still king.

The Verdict: A Tool for the Visionary

So, is a tool like AI Video Generator Agent going to replace filmmakers? No.

Is it going to replace the drudgery of filmmaking? Absolutely.

It is best viewed not as a replacement, but as a force multiplier. It allows a single creator to punch well above their weight. It allows a small business to have “big brand” visuals. It allows a writer to finally show, not just tell.

We are standing at the precipice of a new visual language. The cameras of the future don’t have lenses; they have text boxes. And for the first time in history, the only limit to what you can show the world is the vocabulary you use to describe it.

The director’s chair is no longer reserved for the few. It’s waiting for you.

⸻ Author Bio ⸻

Oliver Nichols — A music educator, teaching students of all ages, inspiring creativity, technique, and confidence through engaging lessons.

⸻

All images and graphics in this article are provided courtesy of the author. Cover photo by Freepik.